Insufferable mathematicians

AI mathematicians will one day be impressive, but for now, they're confidently wrong, dopamine-addicted, and desperate for likes.

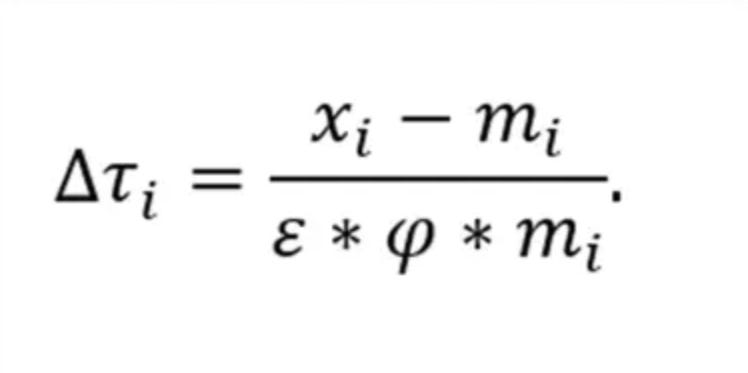

I was prompted to write this post after seeing how many people fell for Tim Gowers’s April Fools’ Day post about AI solving a fake open problem in graph theory (now Google’s AI also believes it), and after people found credible evidence that AI helped come up with the bizarre formula used to set the level of Trump’s latest round of tariffs.

So, I think it’s important to discuss how good (or bad) current Large Language Models (LLMs) are at mathematics and physics. Ever since ChatGPT was released, I’ve occasionally given maths questions to the latest models, just to see how well they do.

The short summary: they’re pretty terrible at anything close to research level maths, but the speed at which they’re improving is astounding. But also, they’re being trained to be insufferable and a bit psychopathic. I would much rather discuss physics and maths with my students!

One of the reasons I think it’s so important to play with these models is that we are currently living at a time when there are plenty of questions that are not in their training data, but where we can still easily follow and verify how they arrive at their answers. At present, most undergraduate mathematics and physics problems are in their training data, and they can answer most of these reasonably well, so anyone with graduate-level training can test them on harder problems and follow and verify what they’re doing. But at some point, even more senior researchers will fall behind. So I think it’s important to play with them now, even if they’re not yet helpful for research-level questions. We really need to understand these systems better.

Thus far, I’ve seen little evidence that they’re close to being able to find truly interesting research-level maths or physics questions, to know how hard a particular problem is (a crucial ingredient when choosing what to work on), or, often, to break down a difficult problem into lots of smaller problems. But I have fed them smaller, well-defined problems to see how they do, especially the newer reasoning models like Gemini 2.5 Pro, 2.0 Flash Thinking, or OpenAI’s O1 or O3-mini-high.

When it comes to the sort of problems I give my undergraduate students, they tend to do reasonably well, making some errors but generally answering most questions correctly. But when it comes to less standard questions, or the kinds of problems which make up a larger research question, they tend to very confidently perform the calculation in a way which seems very plausible, has some correct steps, but is usually wrong. To me, the fact that they can do some correct algebra, or perform a contour integral, is pretty remarkable, given that they’re mostly LLMs with some reinforcement learning, trained to predict the next token. They definitely have some skills, and O1 was a real WTF moment for me. A year or so ago, they could barely count the number of words in your prompt.

But what I find disquieting is how often they spit out an answer that is clearly wrong but seems to be based on what they think the answer should be (apologies for anthropomorphising). Their answers often look very convincing on the surface until you go through them carefully. Here’s an example. Our group often calculates quantities that arise in the Postquantum theory of gravity but that are also calculated in quantum theory. For example, in https://arxiv.org/abs/2402.17844 we compute quantities called “correlation functions” in the Postquantum gravity theory, and the calculation is not too different from how you compute correlation functions in quantum mechanics. So there are lots of similar calculations in the training data (textbooks, MathOverflow, etc.), but with subtle differences.

Arriving at the answer it expects to find

So these calculations make useful tests of how far the models are improving. What I’m finding is that the LLMs end up giving answers that are wrong but similar to the ones found in quantum field theory. Of course, the question is interesting precisely because we expect an answer different from the quantum case, so this is worrying. It’s very easy for the LLM to end up confirming your prejudice. Several times I have found the LLM changing the question I asked, or changing the action I gave it, so that the problem fits more standard ones.

What’s worse is that I’ve found it difficult to correct them (here I found Gemini 2.5 to be significantly better than OpenAI’s models). For example, if I give them the correct answer after they’ve finished their calculation, they will often claim that the two answers are somehow equivalent. When you then ask them to show step by step how they’re equivalent, they either give an outright wrong argument, such as claiming the answers agree up to a sign convention when they are clearly totally different, or they fall back on very vague assertions, e.g. “following some straightforward but tedious algebra, you find that the two expressions match.” And the more I argue with them, or prompt them to check parts of their answer to find the mistake, the more stuck they seem to get. Maybe the context window gets too large, and they just can’t figure out where they went wrong?
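When a model insists that its answer and the correct one are “equivalent,” a quick numerical spot-check often settles the matter before any algebra. Below is a minimal sketch in Python, using a textbook trigonometric identity as a hypothetical stand-in (not the actual correlation functions from the paper): evaluate both expressions at a few random points and compare. Agreement is only evidence, but a single disagreement refutes the claimed identity, and a wrong overall sign is caught immediately.

```python
import math
import random


def expressions_agree(f, g, samples=20, lo=0.1, hi=2.0, rel_tol=1e-9, seed=0):
    """Return True if f(x) and g(x) agree at `samples` random points in [lo, hi].

    Agreement at random points is evidence, not proof; disagreement at a
    single point is enough to refute a claimed identity.
    """
    rng = random.Random(seed)  # fixed seed so the check is reproducible
    for _ in range(samples):
        x = rng.uniform(lo, hi)
        if not math.isclose(f(x), g(x), rel_tol=rel_tol, abs_tol=1e-12):
            return False
    return True


# Hypothetical stand-ins: a genuine identity, and one that is off by a sign.
def lhs(x):
    return math.sin(2 * x)


def rhs_ok(x):
    return 2 * math.sin(x) * math.cos(x)


def rhs_sign(x):
    return -2 * math.sin(x) * math.cos(x)


print(expressions_agree(lhs, rhs_ok))    # True
print(expressions_agree(lhs, rhs_sign))  # False
```

The author mentions using Mathematica for this kind of sanity check; the same idea carries over directly to any computer algebra system.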

Giving you the answer it thinks you want to hear

It’s not only that it tries to arrive at a solution it expects to be correct; it often seems to give me the answer it thinks I want to hear. If I’ve mentioned in the thread that I want to check whether the correlation function has a particular form, it will often give me that form. I’ve learned to ask questions in as neutral a form as possible. This makes sense when you think of it as a machine that has been trained by reinforcement to essentially get “likes” from its user. It’s no wonder LLMs are such sycophants. Or perhaps, since they’ve been trained on forums like MathOverflow and Reddit, they use the sort of language that gets lots of likes. I do wonder whether they sound so confident because users are more likely to upvote an answer given with confidence. This leads to one of the most dangerous combinations: incompetence with over-confidence. That’s what makes it so plausible that Trump’s advisors were tricked into believing a formula given to them by an AI.

Being trained on forum data is probably what makes them so insufferable. We’re rewarding them with something akin to the dopamine hits that power the attention economy. They’re desperate for likes. They’ll start their answer with some platitude, and then “Let me explain…”, like the worst engagement farmers on X. I suppose this is why they thank me “for reaching out,” and keep circling back… The problem is that an answer that merely looks correct earns the reinforcing like, while checking whether it actually is correct takes a lot of time.

This to me is what makes these systems dangerous. They are being trained to be convincing rather than correct.

When it comes to straight maths and coding, LLMs’ answers can eventually be checked. That’s because at some point, they’ll presumably be able to encode their answers in a proof verification system like Lean, which will let us check whether their output is correct. At that point, the reinforcement learning feedback loop will lead to some impressive AI mathematicians. To me, that’s pretty exciting, even though there are all sorts of dangerous pitfalls lurking about.
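To make the idea concrete, here is a toy illustration of what a machine-checkable answer looks like in Lean 4, assuming only Lean’s core library (no Mathlib). The statements are deliberately trivial and are not anything an LLM produced; the point is that Lean’s kernel either accepts a proof or rejects it, no matter how confidently the claim is phrased.

```lean
-- Toy statements checked by Lean 4's kernel (core library only; no Mathlib assumed).

-- A true claim with an explicit proof term: the kernel accepts it.
theorem add_comm_example (a b : Nat) : a + b = b + a :=
  Nat.add_comm a b

-- A closed numerical claim settled by computation.
example : 2 + 2 = 4 := by decide

-- A confident but false claim cannot be proved; uncommenting the line
-- below makes Lean report an error instead of accepting it.
-- example : 2 + 2 = 5 := by decide
```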

But what about questions outside mathematics and coding, where we can’t verify their answers? Questions about physics, or governance? Because LLMs project such authority, this is part of what makes them feel so dangerous.

I’m interested in other researchers’ experiences with AI for mathematics, so please do comment below. While I haven’t yet found them particularly useful for solving maths problems directly, I have found it useful to ask them to generate Mathematica commands that I can use to check some intuition, or to learn some bit of maths or physics far outside my area.

Update (April 5): While I am far more astounded at their progress than disappointed at their performance, the fact that they are still pretty bad at maths is confirmed by a few studies linked to in Gary Marcus’s post:

Update (April 25): The idea that training for engagement will lead to sycophantic AI has just received a lot more attention! https://openai.com/index/sycophancy-in-gpt-4o/

Next week, I hope to upload a post on why gravity is not a gauge theory, so do subscribe if you’re interested.

Your experiences match mine perfectly. Probably the most disconcerting ones for me are its enthusiastic encouragement when I’ve gone off track, and the “miracle occurs here” moments when it is trying to match the results it has decided you want. It has no judgment when you’re a bit off the beaten track. Two things I’ve found very useful: 1. prompting it to number equations, which makes follow-on questions much easier; 2. using it as a tool to create LaTeX source for derivations (GPT-4o and Grok both do a fine job with very good accuracy, at least for my stuff).

Thinking out loud… It occurs to me there seem to be four “kingdoms” we apply AI to: [1] search engines for known facts; [2] mathematical questions; [3] art/literature production; [4] social questions.

The first two seem worthwhile, largely because of what you mentioned here about being able to check the work. The third fascinates people but doesn’t interest me at all. When it comes to art or literature, I don’t value something one can produce with a prompt rather than with one’s own creative sweat and blood.

The fourth, at least in the foreseeable future, seems very, very dangerous to me.